The $75M Opportunity: Consolidating Canada’s AI Spending

Do we really need twelve translation tools for two official languages?

The Canadian government says it is all about efficiency, yet it’s sitting on as much as $75 million a year in redundant AI spending.

Last week I shared some promising tools from the federal government’s AI Register. This week I’m looking at why the registry isn’t all roses.

We have twelve different tools for translation. Seven more for transcription. And at least ten departments licensing Copilot separately.

Carney is learning what it’s like to be an IT manager. The software bloat adds up fast when no one’s watching.

The good news? The AI Register is basically an internal IT audit.

The bad? Anyone who’s survived one of those knows how the story ends. Cuts.

If the government is serious about efficiency, here’s how I expect them to sort through this…

3 Stages of Consolidation

Stage 1: Full Consolidation

These redundancies exist because of coordination failures, not legitimate constraints.

1. Translation Services

12 separate translation tools across departments

No legal reason different departments need different translation engines

Recommendation: Pick one and make it the default. Security is the common excuse for fragmentation (and we do need a Protected B translator). But the registry shows that both PSPC (GCtranslate) and Justice (JUStranslate) have already built compliant tools.

Departments should adopt GCtranslate as the secure interface, routing specific requests to specialized back-end models (like NRC’s Parliamentary MT) only when necessary.
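To make the "one front door, specialized back ends" idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `TranslationRouter` class, the `domain` labels, and the stand-in engines are hypothetical and do not describe how GCtranslate or NRC’s Parliamentary MT actually work.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A translation engine is modelled as a plain function: text in, text out.
# Real engines would be network services; these are hypothetical stand-ins.
Translator = Callable[[str], str]


@dataclass
class TranslationRouter:
    """One shared entry point that falls back to a default engine."""
    default: Translator
    specialized: Dict[str, Translator] = field(default_factory=dict)

    def translate(self, text: str, domain: str = "general") -> str:
        # Route to a specialized engine only when one is registered
        # for the requested domain; otherwise use the default.
        engine = self.specialized.get(domain, self.default)
        return engine(text)


router = TranslationRouter(
    default=lambda text: f"[default engine] {text}",
    specialized={"parliamentary": lambda text: f"[parliamentary engine] {text}"},
)

general_result = router.translate("Bonjour")
parliamentary_result = router.translate("Bonjour", domain="parliamentary")
unknown_result = router.translate("Bonjour", domain="fisheries")
```

Departments only ever call the router, so adding or retiring a back-end engine never changes what they integrate with.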

2. M365 Copilot Licensing

10+ departments licensing separately

No security or legal reason for separate licenses

Recommendation: TBS or SSC should negotiate a single M365 Copilot contract with volume pricing. Microsoft offers enterprise agreements specifically for this. The Register shows “Pilot” or limited deployment status for nearly every department.

This is the right time to consolidate. If they all go to production separately, the government loses massive leverage in the negotiation with Microsoft.

3. IT Service Desk Chatbots

9+ implementations

IT support questions are similar across departments. The only difference is the knowledge base content.

Recommendation: Build one chatbot template on CANChat and let departments plug in their own knowledge base. Same engine, different answers.
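"Same engine, different answers" can be sketched in a few lines of Python. This is an illustration only: the `ServiceDeskBot` class, the keyword matching, and both knowledge bases are made up, and nothing here reflects how CANChat is actually built.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ServiceDeskBot:
    """Shared chatbot template; only the knowledge base differs per department."""
    department: str
    knowledge_base: Dict[str, str]  # keyword -> canned answer

    def answer(self, question: str) -> str:
        lowered = question.lower()
        # Naive keyword lookup stands in for whatever retrieval
        # the real shared engine would provide.
        for keyword, reply in self.knowledge_base.items():
            if keyword in lowered:
                return reply
        return f"No answer found. Please contact the {self.department} service desk."


# Hypothetical departments with invented knowledge-base entries.
dept_a_bot = ServiceDeskBot("Dept A", {"password": "Reset it through the Dept A portal."})
dept_b_bot = ServiceDeskBot("Dept B", {"password": "Call the Dept B help line."})

answer_a = dept_a_bot.answer("How do I reset my password?")
answer_b = dept_b_bot.answer("How do I reset my password?")
fallback = dept_a_bot.answer("My monitor is flickering")
```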

4. Transcription Services

5+ implementations all using similar tech like Whisper

Transcription of meetings is not department-specific

Recommendation: SSC should package up the RCMP’s Echo and offer it as transcription-as-a-service. The hard work is already done.

5. Media Monitoring

6+ implementations using different vendors for the same public data

All monitoring public news sources

Recommendation: GAC already built Doc Cracker in-house. Scale it up or use it as the blueprint for a government-wide media monitoring tool.

Stage 2: Partial Consolidation

Share the infrastructure. Keep the independence.

1. Policy RAG Chatbots

8+ implementations across departments

All use RAG architecture, a vector database, and LLM access. The only differences are knowledge base content, prompt templates, and department terminology.

Recommendation: The registry mentions ISED’s Radia is already being used by “over 40 federal departments” as a proof of concept. The government is trying to consolidate here. But departments like DND (Long Wizard) and RCMP (Polly) are still building their own.

When a scalable, multi-tenant platform like Radia already exists, use it. Every new standalone chatbot is a failure of governance, not technology.
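Here is a rough Python sketch of what "multi-tenant" means in practice: one shared retrieval engine, with each department’s documents and prompt template kept in its own tenant. The `Tenant` and `SharedRagPlatform` names, the word-overlap ranking, and the sample policies are all invented for illustration; I have no visibility into how Radia is implemented, and a real platform would use a vector database and an LLM call.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Tenant:
    """Per-department settings: its own template and its own documents."""
    prompt_template: str  # must contain {context} and {question}
    documents: List[str] = field(default_factory=list)


class SharedRagPlatform:
    """One engine; retrieval never crosses tenant boundaries."""

    def __init__(self) -> None:
        self._tenants: Dict[str, Tenant] = {}

    def register(self, name: str, tenant: Tenant) -> None:
        self._tenants[name] = tenant

    def build_prompt(self, tenant_name: str, question: str) -> str:
        tenant = self._tenants[tenant_name]
        words = set(question.lower().split())
        # Rank only this tenant's documents by shared words
        # (a toy stand-in for vector similarity search).
        best = max(
            tenant.documents,
            key=lambda doc: len(words & set(doc.lower().split())),
            default="",
        )
        return tenant.prompt_template.format(context=best, question=question)


platform = SharedRagPlatform()
# Invented tenants and invented policy text.
platform.register("dept_a", Tenant(
    "Policy excerpt: {context}\nQuestion: {question}",
    ["Travel claims are due within 30 days.", "Telework requires manager approval."],
))
platform.register("dept_b", Tenant(
    "Context: {context}\nQ: {question}",
    ["Travel claims are due within 10 days."],
))

prompt_a = platform.build_prompt("dept_a", "When are travel claims due?")
prompt_b = platform.build_prompt("dept_b", "When are travel claims due?")
```

The same question produces a different prompt per tenant, and neither department’s documents can leak into the other’s answer.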

2. Anomaly Detection / Fraud Detection

6+ implementations

All use similar ML algorithms and training methodologies. The differences are training data, detection thresholds, and business rules.

Recommendation: You can’t pool the data. Tax records and border crossings should stay siloed. But you can pool the math. Share the MLOps pipeline (the code that trains the models), even if the data stays separate.
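"Pool the math, not the data" looks something like the Python sketch below. It is a deliberately simple stand-in: a z-score detector replaces whatever models departments really use, the function name `train_detector` is hypothetical, and the numbers are invented sample values, not real tax or border data.

```python
from statistics import mean, stdev
from typing import Callable, List


def train_detector(history: List[float], threshold: float) -> Callable[[float], bool]:
    """Shared training code: fit a z-score detector on ONE department's data.

    The data never leaves the caller; only this function is shared.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        raise ValueError("history has no variation; cannot compute z-scores")

    def is_anomaly(value: float) -> bool:
        # Flag values more than `threshold` standard deviations from the mean.
        return abs(value - mu) / sigma > threshold

    return is_anomaly


# Each department trains separately, on its own data, with its own threshold.
tax_history = [100.0, 102.0, 98.0, 101.0, 99.0]    # invented sample values
border_history = [20.0, 22.0, 19.0, 21.0, 18.0]    # invented sample values

tax_detector = train_detector(tax_history, threshold=3.0)
border_detector = train_detector(border_history, threshold=2.0)
```

Both detectors come out of the same code path, but neither one has seen the other department’s records.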

3. OCR / Document Digitization

7+ implementations across departments

All use similar OCR engines and handwriting recognition models. The differences are document types, field extraction rules, and quality thresholds.

Recommendation: Build one document scanning service and let each department configure what fields to extract. Fish slips and census forms can run on the same OCR engine.
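A minimal Python sketch of "one engine, per-department field rules" follows. It assumes the shared OCR engine has already turned the scan into text, and that each department supplies its extraction rules as regular expressions. The function name, the field names, and the sample form text are all hypothetical.

```python
import re
from typing import Dict


def extract_fields(ocr_text: str, field_patterns: Dict[str, str]) -> Dict[str, str]:
    """Apply one department's field rules to text from the shared OCR engine."""
    result: Dict[str, str] = {}
    for name, pattern in field_patterns.items():
        match = re.search(pattern, ocr_text)
        if match:
            # Each pattern captures the field value in its first group.
            result[name] = match.group(1)
    return result


# Department-owned configuration (invented examples).
FISH_SLIP_FIELDS = {
    "species": r"Species:\s*(\w+)",
    "weight_kg": r"Weight:\s*([\d.]+)",
}
CENSUS_FIELDS = {
    "household_size": r"Household size:\s*(\d+)",
}

fish_result = extract_fields("Species: Cod\nWeight: 12.5 kg", FISH_SLIP_FIELDS)
census_result = extract_fields("Household size: 4", CENSUS_FIELDS)
mismatch_result = extract_fields("Species: Cod\nWeight: 12.5 kg", CENSUS_FIELDS)
```

The extraction code is identical for both forms; only the configuration each department hands in is different.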

4. Correspondence Drafting Tools

6+ implementations

All use similar LLM access, drafting templates, and tone guidance. The differences are ministerial style guides, subject matter expertise, and approval workflows.

Recommendation: Share the drafting tools but keep the style guides separate. Minister A can still sound like Minister A.

Stage 3: Keep Independent

This isn’t a pure efficiency play. Some fragmentation is rational, and these redundancies exist for valid legal, security, or ethical reasons.

1. Legal AI

3+ implementations

Solicitor-client privilege creates real walls, not just organizational ones. Legal advice given to one department cannot be accessible to others.

2. Immigration AI

15+ implementations at IRCC

Immigration decisions carry legal weight and appeal rights under department-specific legislative authority that can’t be shared.

3. Law Enforcement AI

8+ implementations at RCMP

Criminal investigations require compartmentalization due to evidence chain of custody, legal admissibility, and Protected B+ classification.

4. Indigenous Data

3+ implementations, primarily at ISC

OCAP principles require that First Nations data be owned and controlled by Indigenous communities, and trust relationships matter more than platform efficiency.

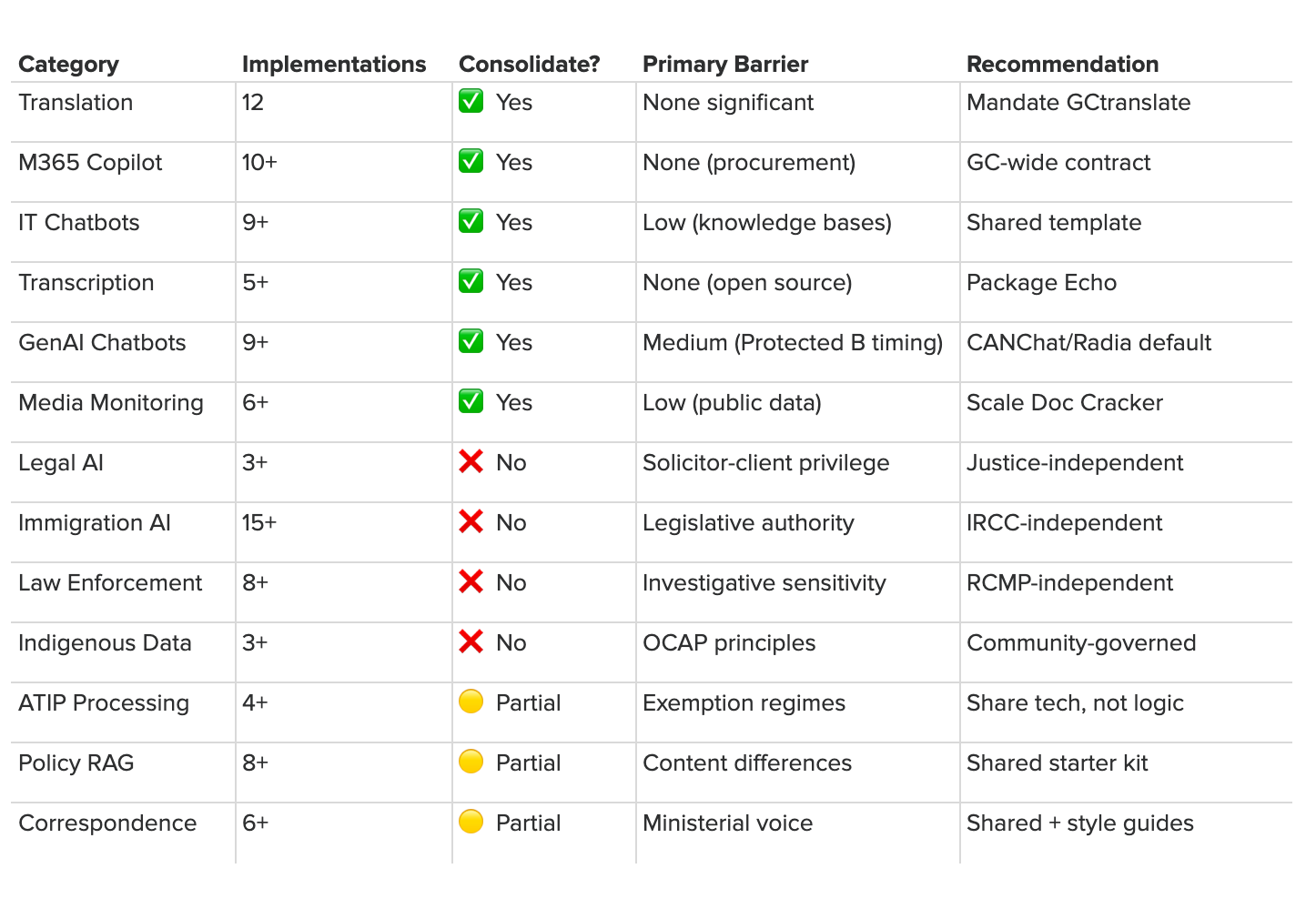

The Consolidation Matrix

The Payoff

If 20 departments spend $2M each on custom chatbot R&D + cloud hosting + maintenance, that’s $40M right there. Add in the premium paid for individual Copilot licenses vs. an Enterprise bulk deal, and the math holds up.

By treating general-purpose AI infrastructure as a shared utility rather than a departmental silo, the federal government could unlock estimated hard savings of $45 million to $75 million annually.
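The back-of-envelope chatbot figure can be written out explicitly. In this Python sketch the 20 departments and $2M each come from the paragraph above; the shared platform cost is a placeholder I made up, because I don’t have a real number for it. The point is only that net savings are the duplicated spend minus whatever the shared service costs to run.

```python
def duplicated_spend(departments: int, cost_each_m: float) -> float:
    """Total spend, in millions of dollars, when every department builds its own."""
    return departments * cost_each_m


def net_savings(departments: int, cost_each_m: float, shared_platform_cost_m: float) -> float:
    """Duplicated spend minus the cost of one shared platform (all in $M)."""
    return duplicated_spend(departments, cost_each_m) - shared_platform_cost_m


# Figures from the text: 20 departments at $2M each.
chatbot_spend = duplicated_spend(20, 2.0)
print(chatbot_spend)  # 40.0

# Placeholder only: 10.0 is NOT a figure from the Register or this article.
example_net = net_savings(20, 2.0, shared_platform_cost_m=10.0)
```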

Consolidation also brings faster deployment, access for smaller departments to tools they couldn’t build alone, and security inheritance that saves months of approvals.

But I’m just looking at a public dataset from the outside. I’m sure there are things I can’t see.

I can’t see the internal politics. I can’t see which Deputy Ministers will protect their turf and which will welcome consolidation. I can’t see the vendor contracts or the switching costs. I can’t see the dozen pilots that failed and never made it to the Register.

But here’s what I can see: a clear path to doing more with less while the AI race heats up.

Next in this series:

Validated Startup Opportunities Hiding in the Register

Canada’s Unique Talent Advantage in the AI Race

Foreign Dependencies that Should Keep You up at Night